Introduction

Cardiovascular disease remains one of the leading causes of mortality and morbidity worldwide, and atherosclerosis is its primary pathological basis. Early detection of atherosclerosis and precise risk stratification are critical for preventing cardiovascular events and reducing mortality [1–3]. However, the risk assessment tools currently used in clinical practice (such as the Framingham risk score and the ASCVD 10-year risk calculator) mainly integrate clinical and laboratory indicators. Although they perform well at the population level, they do not make full use of plaque imaging phenotypes and still rely on manual interpretation. As a result, both their accuracy and their efficiency leave room for improvement, and they struggle to meet the demand for rapid, individualized risk assessment [4, 5]. Developing automated, intelligent techniques for assessing atherosclerosis risk has therefore become an urgent challenge in cardiovascular disease management [6, 7].

In recent years, deep learning (DL) has emerged as a cutting-edge technology in artificial intelligence, achieving significant breakthroughs in medical image analysis and data mining [8]. Convolutional neural networks (CNNs), in particular, have demonstrated exceptional performance in image segmentation and classification, offering innovative solutions for the precise identification and quantification of pathological lesions in medical images [9]. Additionally, attention mechanisms have further improved model performance by emphasizing critical lesion regions, especially in the analysis of complex images and multimodal data [10–12]. These rapid technological advances open new avenues for addressing the current limitations in atherosclerosis risk evaluation [13].

DL has already been applied to the diagnosis and prediction of cardiovascular diseases, but atherosclerosis risk stratification still poses specific challenges, including multimodal data fusion, interpretability, and the accurate identification of high-risk lesions [14–16]. First, the integration and joint analysis of multimodal data, including computed tomography angiography (CTA), ultrasound imaging, and clinical information, remain immature [17]. Second, limited interpretability and clinical usability restrict the practical application of these models [18]. Finally, current approaches still struggle to identify high-risk lesions accurately, even though such identification is essential for precise clinical risk assessment [19].

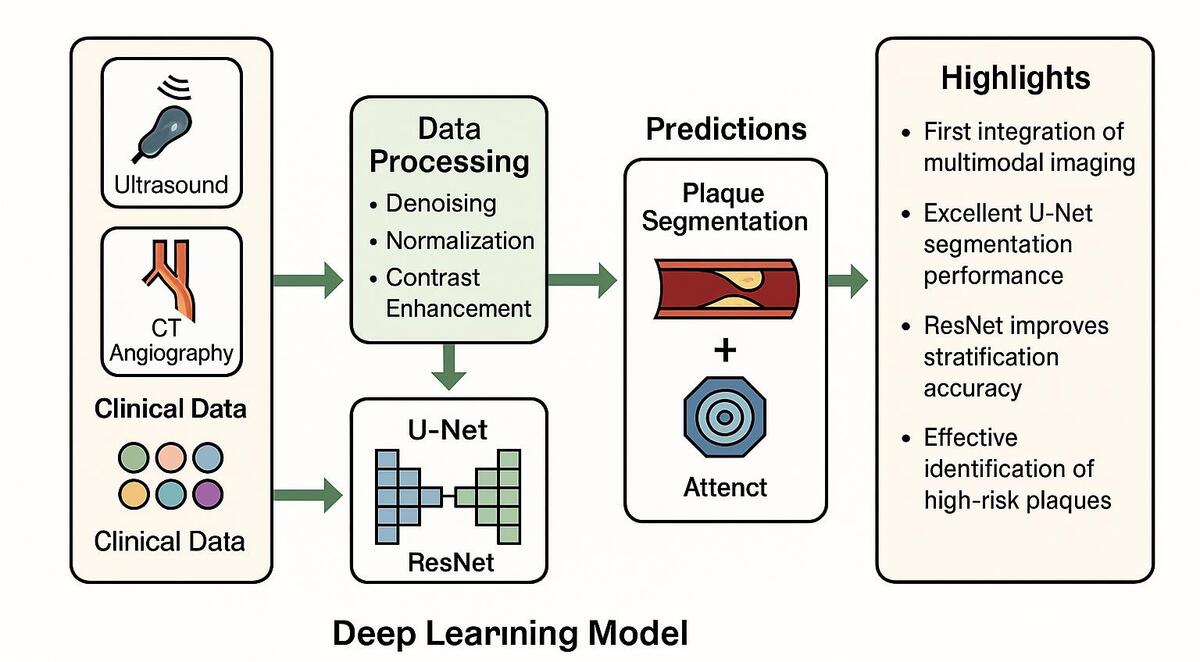

Against this backdrop, the present study proposes a DL-based risk stratification model for atherosclerosis. By integrating the U-Net and Residual Network (ResNet) frameworks, the model aims to achieve high-precision lesion segmentation and accurate risk classification. An attention mechanism further enhances the model’s ability to capture key pathological features and thereby improves the identification of high-risk lesions. We also integrated multimodal medical imaging data with clinical features and designed a scalable, stable pipeline for model training and validation, intended to support the practical implementation of the model in real-world clinical scenarios.

The primary objective of this study is to develop an efficient and precise tool for atherosclerosis risk stratification that provides reliable decision support for clinicians. Through comprehensive analysis of multimodal data, the model is expected to furnish a scientific basis for personalized treatment strategies, ultimately optimizing early prevention and therapeutic interventions for cardiovascular disease. We anticipate that this work will not only advance the application of DL in medical image analysis but also contribute to improved prognostic outcomes for patients with cardiovascular disease.

Material and methods

Data collection and quality control

All data used in this study were obtained from publicly accessible, de-identified multimodal medical imaging databases, including ultrasound and CTA images. The datasets contained no personally identifiable information, and the institutional ethics committee confirmed that no additional informed consent or IRB approval was required for this research. All imaging data passed through a rigorous preprocessing pipeline to support stable and generalizable model training. However, public databases typically apply specific inclusion and exclusion criteria, which may underrepresent certain high-risk or rare phenotypes; the resulting selection bias partly limits the model’s generalizability to real-world populations.

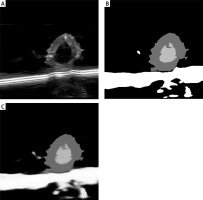

To enhance data quality, images that were blurry, low-resolution, or lacked critical information were excluded. Furthermore, all images were resampled to a standardized resolution to ensure consistency across the dataset. Initial denoising and contrast enhancement were performed to meet the requirements of subsequent DL model training (Supplementary Figure S1).
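The exclusion and resampling steps above can be sketched as follows. This is a minimal illustration under stated assumptions, not the study’s implementation: the source does not report its exclusion thresholds, blur metric, or target resolution, so the variance-of-Laplacian sharpness score and the hypothetical constants `MIN_SIDE`, `MIN_SHARPNESS`, and `TARGET_SHAPE` (256 × 256) are placeholders chosen for illustration.

```python
import numpy as np

# Hypothetical values: the study does not report its exclusion criteria or target size.
MIN_SIDE = 128             # reject images whose shorter side is below this (pixels)
MIN_SHARPNESS = 10.0       # reject images whose Laplacian variance is below this
TARGET_SHAPE = (256, 256)  # assumed "standardized resolution"


def laplacian_variance(img: np.ndarray) -> float:
    """Variance of a 4-neighbour Laplacian response; low values suggest a blurry image."""
    img = img.astype(np.float64)
    lap = (img[:-2, 1:-1] + img[2:, 1:-1] + img[1:-1, :-2] + img[1:-1, 2:]
           - 4.0 * img[1:-1, 1:-1])
    return float(lap.var())


def passes_quality_check(img: np.ndarray) -> bool:
    """Exclude low-resolution or blurry images (thresholds are assumptions)."""
    if min(img.shape) < MIN_SIDE:
        return False
    return laplacian_variance(img) >= MIN_SHARPNESS


def resample_bilinear(img: np.ndarray, shape=TARGET_SHAPE) -> np.ndarray:
    """Resample a 2-D image to `shape` with bilinear interpolation (corners aligned)."""
    img = img.astype(np.float64)
    h, w = img.shape
    out_h, out_w = shape
    # Map each output row/column back to a fractional input coordinate.
    ys = np.linspace(0, h - 1, out_h)
    xs = np.linspace(0, w - 1, out_w)
    y0, x0 = np.floor(ys).astype(int), np.floor(xs).astype(int)
    y1, x1 = np.minimum(y0 + 1, h - 1), np.minimum(x0 + 1, w - 1)
    wy, wx = (ys - y0)[:, None], (xs - x0)[None, :]
    # Interpolate along x on the two neighbouring rows, then along y.
    top = img[np.ix_(y0, x0)] * (1 - wx) + img[np.ix_(y0, x1)] * wx
    bottom = img[np.ix_(y1, x0)] * (1 - wx) + img[np.ix_(y1, x1)] * wx
    return top * (1 - wy) + bottom * wy
```

Bilinear interpolation suits intensity images; label masks would instead need nearest-neighbour resampling so that class values stay discrete.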

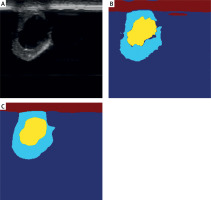

Image preprocessing and annotation

During preprocessing, data augmentation techniques including random rotation, scaling, and cropping were applied to increase the diversity of the training data and improve the model’s generalizability across different scenarios. Pixel values were normalized by standardization to ensure a consistent feature distribution. Annotation was performed collaboratively by multiple experienced radiologists, who delineated atherosclerotic plaque lesions, categorized lesion types, and documented their distribution characteristics. A semi-automated segmentation tool based on a DL model assisted the experts, improving both annotation efficiency and quality. The annotations were cross-checked among annotators to ensure accurate lesion boundaries and features (Supplementary Figure S2).
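For segmentation, an augmentation must move the image and its lesion mask together, or the labels no longer match the pixels. The sketch below illustrates this under stated assumptions: the hypothetical `augment` restricts rotation to multiples of 90 degrees (a simplification, since arbitrary angles need interpolation), implements scaling as a random crop resized back with nearest-neighbour indexing, and uses an assumed `scale_range` of 0.8 to 1.0. `standardize` is one common form of the z-score standardization mentioned above.

```python
import numpy as np


def augment(image, mask, rng, scale_range=(0.8, 1.0)):
    """Apply the same random rotation, scaling and cropping to an image and its mask."""
    # Rotation: multiples of 90 degrees only (simplification; arbitrary angles need interpolation).
    k = int(rng.integers(0, 4))
    image, mask = np.rot90(image, k), np.rot90(mask, k)
    h, w = image.shape
    # Scaling + cropping: take a random crop covering a fraction `s` of each side ...
    s = rng.uniform(*scale_range)
    ch, cw = max(1, int(round(h * s))), max(1, int(round(w * s)))
    top = int(rng.integers(0, h - ch + 1))
    left = int(rng.integers(0, w - cw + 1))
    image = image[top:top + ch, left:left + cw]
    mask = mask[top:top + ch, left:left + cw]
    # ... then resize it back to (h, w) by nearest-neighbour indexing,
    # which keeps mask labels discrete.
    rows = np.arange(h) * ch // h
    cols = np.arange(w) * cw // w
    return image[np.ix_(rows, cols)], mask[np.ix_(rows, cols)]


def standardize(image, eps=1e-8):
    """Z-score standardization: zero mean, unit variance per image."""
    image = image.astype(np.float64)
    return (image - image.mean()) / (image.std() + eps)
```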

DL model design

This study employed an integrated DL framework combining multiple models: U-Net for high-precision lesion segmentation, ResNet for feature extraction and classification, and an attention mechanism to enhance the identification of critical pathological regions. U-Net’s encoder-decoder architecture enables precise pixel-level segmentation that captures detailed plaque features, while ResNet extracts high-level imaging features through stacked convolutional layers to assess lesion severity. The self-attention module focuses on regions likely to be associated with high-risk events, increasing the model’s sensitivity to lesion characteristics. Finally, the model fuses imaging features with clinical data for integrated multimodal analysis (Supplementary Figure S3).
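The self-attention and fusion steps described above can be illustrated with a minimal NumPy sketch. It is not the study’s architecture. It assumes scaled dot-product self-attention over the spatial positions of a feature map with a residual connection, and late fusion by concatenating globally pooled image features with a clinical feature vector before a logistic head. The projection matrices `w_q`, `w_k`, `w_v` and the head parameters `w_head`, `b_head` are hypothetical and would normally be learned.

```python
import numpy as np


def softmax(x, axis=-1):
    x = x - x.max(axis=axis, keepdims=True)  # subtract the max for numerical stability
    e = np.exp(x)
    return e / e.sum(axis=axis, keepdims=True)


def spatial_self_attention(feat, w_q, w_k, w_v):
    """Scaled dot-product self-attention over the H*W positions of a (C, H, W) map.

    w_q, w_k: (C, d) projections; w_v: (C, C) so the output can be added residually.
    """
    c, h, w = feat.shape
    tokens = feat.reshape(c, h * w).T                       # (N, C): one token per position
    q, k, v = tokens @ w_q, tokens @ w_k, tokens @ w_v
    attn = softmax(q @ k.T / np.sqrt(q.shape[1]), axis=-1)  # (N, N); each row sums to 1
    out = tokens + attn @ v                                 # residual connection
    return out.T.reshape(c, h, w), attn


def fuse_and_score(image_feat, clinical, w_head, b_head):
    """Late fusion: pool image features, append clinical features, apply a logistic head."""
    pooled = image_feat.mean(axis=(1, 2))       # global average pooling -> (C,)
    fused = np.concatenate([pooled, clinical])  # (C + n_clinical,)
    risk = 1.0 / (1.0 + np.exp(-(fused @ w_head + b_head)))
    return fused, float(risk)
```

Each row of `attn` shows how strongly one spatial position draws on every other position, which is the long-range context that convolution alone captures only indirectly.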

Data partitioning and model training

The dataset was randomly divided into training, validation, and test sets in an 8 : 1 : 1 ratio for model training, hyperparameter tuning, and performance evaluation, respectively. To assess stability across data splits and reduce the risk of overfitting, 5-fold cross-validation was performed during training, with a different random subset of the data held out as the validation set in each fold (Supplementary Figure S4). The model was optimized with a cross-entropy loss and the Adaptive Moment Estimation (Adam) optimizer at an initial learning rate of 0.001, which was adjusted dynamically during training to accelerate convergence. Early stopping halted training when the validation loss failed to improve significantly over several consecutive epochs, and each training run was capped at 20 epochs. Weight decay was applied to further prevent overfitting.
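The partitioning and stopping logic can be sketched in plain Python. This is an illustration, not the authors’ code. The 8 : 1 : 1 ratio, the 5 folds, and the 20-epoch cap come from the text. The patience and `min_delta` values of the hypothetical `EarlyStopping` class are assumptions, because the text says only "several epochs" and "significantly".

```python
import random

MAX_EPOCHS = 20  # per-run cap stated in the text


def split_811(n, seed=0):
    """Shuffle indices 0..n-1 and split them 8:1:1 into train/validation/test."""
    idx = list(range(n))
    random.Random(seed).shuffle(idx)
    n_train, n_val = int(0.8 * n), int(0.1 * n)
    return idx[:n_train], idx[n_train:n_train + n_val], idx[n_train + n_val:]


def k_fold(indices, k=5):
    """Yield (train, val) pairs; each index is held out for validation exactly once."""
    folds = [indices[i::k] for i in range(k)]
    for i in range(k):
        train = [j for f, fold in enumerate(folds) if f != i for j in fold]
        yield train, folds[i]


class EarlyStopping:
    """Signal a stop when validation loss has not improved by `min_delta`
    for `patience` consecutive epochs (both values hypothetical)."""

    def __init__(self, patience=3, min_delta=1e-3):
        self.patience, self.min_delta = patience, min_delta
        self.best, self.bad_epochs = float("inf"), 0

    def step(self, val_loss):
        if val_loss < self.best - self.min_delta:
            self.best, self.bad_epochs = val_loss, 0
        else:
            self.bad_epochs += 1
        return self.bad_epochs >= self.patience
```

In use, `k_fold` would be applied to the training indices returned by `split_811`, with a fresh `EarlyStopping` per fold and at most `MAX_EPOCHS` epochs per run; the test indices stay untouched until the final evaluation.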

Performance evaluation and interpretability analysis

Model performance was evaluated using multiple metrics: for segmentation tasks, the Dice coefficient and intersection over union (IoU) were calculated; for classification tasks, accuracy, sensitivity, specificity, F1 score, and the area under the receiver operating characteristic (ROC) curve (AUC) were computed. A confusion matrix was used to analyze misclassification rates between high-risk and low-risk categories, thereby quantifying the model’s reliability and clinical utility. To enhance interpretability, gradient-weighted class activation mapping (Grad-CAM) generated heatmaps highlighting the key lesion regions identified by the model, and these results were validated by clinical experts (Supplementary Figure S5).
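The metrics listed above have standard definitions, sketched below for reference. These are textbook formulas rather than the study’s evaluation code. The binary-mask representation and the label convention (1 = high risk) are assumptions. AUC is computed in its Mann-Whitney form, as the probability that a randomly chosen positive case receives a higher score than a randomly chosen negative case.

```python
import numpy as np


def dice_iou(pred, target, eps=1e-8):
    """Dice = 2|P & T| / (|P| + |T|); IoU = |P & T| / |P | T| for binary masks."""
    pred, target = np.asarray(pred, dtype=bool), np.asarray(target, dtype=bool)
    inter = np.logical_and(pred, target).sum()
    union = np.logical_or(pred, target).sum()
    dice = 2.0 * inter / (pred.sum() + target.sum() + eps)
    iou = inter / (union + eps)
    return float(dice), float(iou)


def _div(a, b):
    return a / b if b else 0.0


def classification_metrics(y_true, y_pred):
    """Confusion-matrix metrics; label 1 = high risk, 0 = low risk (assumed convention)."""
    pairs = list(zip(y_true, y_pred))
    tp = sum(t == 1 and p == 1 for t, p in pairs)
    tn = sum(t == 0 and p == 0 for t, p in pairs)
    fp = sum(t == 0 and p == 1 for t, p in pairs)
    fn = sum(t == 1 and p == 0 for t, p in pairs)
    sens, spec, prec = _div(tp, tp + fn), _div(tn, tn + fp), _div(tp, tp + fp)
    return {
        "confusion": [[tn, fp], [fn, tp]],  # rows: true low/high; columns: predicted low/high
        "accuracy": _div(tp + tn, len(pairs)),
        "sensitivity": sens,
        "specificity": spec,
        "f1": _div(2 * prec * sens, prec + sens),
    }


def auc(y_true, scores):
    """ROC AUC: probability that a random positive outranks a random negative (ties = 0.5)."""
    pos = [s for t, s in zip(y_true, scores) if t == 1]
    neg = [s for t, s in zip(y_true, scores) if t == 0]
    wins = sum(1.0 if p > n else 0.5 if p == n else 0.0 for p in pos for n in neg)
    return wins / (len(pos) * len(neg))
```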

Data analysis and model optimization

Subsequent statistical analysis examined the correlations between imaging features and clinical data to identify key variables significantly associated with high-risk events. Dimensionality reduction techniques such as principal component analysis (PCA) were used to extract the core feature components that influence prediction accuracy. The attention mechanism was further refined to improve the identification of high-risk lesion areas, and the model architecture was iteratively improved, for example by adding deeper convolutional networks or enhanced feature fusion modules, to boost overall performance (Supplementary Figure S6).
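A minimal sketch of the correlation screening and PCA steps follows. It assumes that features form the columns of a matrix `X` and that `y` is a numeric outcome, such as a high-risk event indicator. The function names are hypothetical. Note that PCA returns linear combinations of variables rather than a subset of them. The loadings in `components` indicate which original variables dominate each component.

```python
import numpy as np


def pearson_with_target(X, y):
    """Pearson r between each feature column of X (n, p) and the outcome y (n,)."""
    Xc = X - X.mean(axis=0)
    yc = y - y.mean()
    num = (Xc * yc[:, None]).sum(axis=0)
    den = np.sqrt((Xc ** 2).sum(axis=0)) * np.sqrt((yc ** 2).sum())
    return num / den


def pca(X, n_components):
    """PCA via SVD of the standardized feature matrix.

    Returns component scores (n, k), loadings (k, p) and explained-variance ratios (k,).
    """
    Z = (X - X.mean(axis=0)) / X.std(axis=0)
    _, S, Vt = np.linalg.svd(Z, full_matrices=False)  # singular values in descending order
    explained = S ** 2 / np.sum(S ** 2)
    components = Vt[:n_components]
    return Z @ components.T, components, explained[:n_components]
```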

Multicenter clinical data validation

The developed DL model was applied in real-world clinical settings to compare its efficiency and accuracy with those of traditional expert assessments. Performance was validated on patient datasets to test the model’s generalizability and its ability to differentiate stable from vulnerable plaques. Ultimately, the model is intended to serve as a clinical decision-support tool for early atherosclerosis risk screening and for guiding personalized treatment strategies.

Results

Data preprocessing and distribution analysis

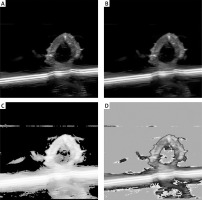

The quality of the multimodal atherosclerosis images (ultrasound and CTA) directly affected the performance of the DL model, so denoising, contrast enhancement, and image normalization were applied. Gaussian filtering reduced noise, histogram equalization improved the visibility of plaque lesions, and normalization brought the multimodal inputs into a unified distribution range. Figure 1 compares raw and preprocessed images and shows the improved detail in lesion areas. All images were annotated by experienced radiologists, focusing on plaque shape, location, and type, and the annotations were cross-checked to ensure consistent boundaries and accurate feature delineation. Table I summarizes the sample distribution across the training, validation, and test sets, which provides balanced support for subsequent model development. Table II compares the model developed in this study with current mainstream methods in terms of data modalities, multi-task design, and performance metrics, highlighting the innovative aspects of this model.
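The three preprocessing steps can be sketched in NumPy. This is one plausible implementation, not the study’s pipeline. It assumes 8-bit grayscale input, a separable Gaussian filter with edge-replicated borders, global histogram equalization, and min-max normalization to [0, 1]. The `sigma` value, the kernel radius of 3 sigma, and the order of the steps are assumptions.

```python
import numpy as np


def gaussian_kernel(sigma):
    """Normalized 1-D Gaussian kernel truncated at about 3 sigma."""
    radius = max(1, int(3 * sigma + 0.5))
    x = np.arange(-radius, radius + 1, dtype=np.float64)
    k = np.exp(-x ** 2 / (2 * sigma ** 2))
    return k / k.sum()


def gaussian_blur(img, sigma=1.0):
    """Separable Gaussian filter; edge padding keeps the output the same size."""
    k = gaussian_kernel(sigma)
    r = len(k) // 2
    padded = np.pad(img.astype(np.float64), r, mode="edge")
    # Filter along rows, then columns; 'valid' convolution trims the padding away.
    rows = np.apply_along_axis(lambda v: np.convolve(v, k, mode="valid"), 1, padded)
    return np.apply_along_axis(lambda v: np.convolve(v, k, mode="valid"), 0, rows)


def equalize_histogram(img_u8):
    """Global histogram equalization of an 8-bit image via its cumulative histogram."""
    hist = np.bincount(img_u8.ravel(), minlength=256)
    cdf = np.cumsum(hist)
    cdf_min = cdf[cdf > 0][0]
    denom = cdf[-1] - cdf_min
    if denom == 0:  # constant image: nothing to stretch
        return img_u8.copy()
    lut = np.clip(np.round((cdf - cdf_min) / denom * 255), 0, 255).astype(np.uint8)
    return lut[img_u8]


def minmax_normalize(img, eps=1e-8):
    """Rescale intensities to [0, 1] so all inputs share one range."""
    img = img.astype(np.float64)
    return (img - img.min()) / (img.max() - img.min() + eps)


def preprocess(raw_u8, sigma=1.0):
    denoised = gaussian_blur(raw_u8, sigma)
    enhanced = equalize_histogram(np.clip(np.round(denoised), 0, 255).astype(np.uint8))
    return minmax_normalize(enhanced)
```

Global equalization is the simplest variant. A contrast-limited, tile-based variant (CLAHE) is often preferred for medical images because it amplifies noise less, but the text does not say which variant was used.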

Figure 1

Comparison of image preprocessing effects. A – Original image: lesion areas appear blurred and noisy. B – After Gaussian denoising: noise is reduced and vessel boundaries are clear. C – After histogram equalization: plaque regions are markedly enhanced. D – After normalization: image details are presented uniformly

Table I

Distribution of multimodal data samples

| Dataset | Ultrasound images | CTA images | Clinical feature data | Total |
|---|---|---|---|---|
| Training | 5000 | 3000 | 2000 | 10000 |
| Validation | 1000 | 600 | 400 | 2000 |
| Test | 1000 | 600 | 400 | 2000 |
| Total | 7000 | 4200 | 2800 | 14000 |

Table II

Model performance comparison.

Note: M3-Net (ours) stands for multimodal multi-task network, which fuses ultrasound (US), CTA, and clinical data through an attention-enhanced U-Net segmenter and a ResNet-based classifier to perform joint plaque segmentation and classification. Dice – segmentation Dice coefficient; AUC – area under the ROC curve; Acc – classification accuracy; “–” indicates that the metric is not applicable.

Precise segmentation of arterial lesions with the U-Net model

Applied to arterial lesion segmentation, the U-Net model achieved a Dice coefficient of 0.88 on the test set, demonstrating its effectiveness in identifying both the core and peripheral areas of atherosclerotic plaques. The attention mechanism further enhanced the model’s ability to capture subtle lesion details, particularly in complex vascular structures. Figure 2 illustrates the segmentation performance of the U-Net model; the overlap between the model-generated results and expert annotations exceeded 85%.

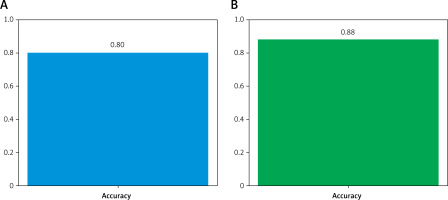

Effective identification of high-risk patients by the classification model

By combining the segmentation results with clinical features, the ResNet classification model performed strongly in stratifying the risk of cardiovascular events (CVEs). As shown in Figure 3, the area under the ROC curve (AUC) was 0.97, indicating high discrimination between high-risk and low-risk patients. In predicting high-risk events such as myocardial infarction or stroke, the model achieved a sensitivity of 90% and a specificity of 87%, substantially outperforming traditional imaging diagnostic methods.
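A single sensitivity/specificity pair is one operating point on the ROC curve, and the AUC summarizes all operating points. The text does not state how the reported cutoff was chosen. The sketch below assumes one common choice, the threshold that maximizes Youden’s J (sensitivity + specificity − 1), and builds the ROC curve by sweeping every distinct score as a "score ≥ threshold" cutoff. The function names are hypothetical.

```python
def roc_points(y_true, scores):
    """(FPR, TPR, threshold) for each distinct score used as a 'score >= threshold' cutoff."""
    n_pos = sum(1 for t in y_true if t == 1)
    n_neg = len(y_true) - n_pos
    points = [(0.0, 0.0, float("inf"))]  # a threshold above every score
    for thr in sorted(set(scores), reverse=True):
        tp = sum(1 for t, s in zip(y_true, scores) if t == 1 and s >= thr)
        fp = sum(1 for t, s in zip(y_true, scores) if t == 0 and s >= thr)
        points.append((fp / n_neg, tp / n_pos, thr))
    return points


def trapezoid_auc(points):
    """Area under the ROC polyline; points are ordered by decreasing threshold."""
    return sum((x1 - x0) * (y0 + y1) / 2.0
               for (x0, y0, _), (x1, y1, _) in zip(points, points[1:]))


def youden_operating_point(points):
    """Cutoff maximizing Youden's J = TPR - FPR; returns (threshold, sensitivity, specificity)."""
    fpr, tpr, thr = max(points, key=lambda p: p[1] - p[0])
    return thr, tpr, 1.0 - fpr
```

Other cutoff rules, such as fixing sensitivity at a clinically required level, would give different sensitivity/specificity pairs from the same ROC curve.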

Key region identification using Grad-CAM technology

Grad-CAM was used to visualize the model’s decision-making process. Figure 4 shows that the model focuses on areas of dense plaque accumulation and on critical regions of vascular narrowing. Clinical validation confirmed that these highlighted high-risk areas correspond closely with the regions targeted in clinical interventions, substantially improving the model’s interpretability. This visualization not only increases clinicians’ confidence in AI-assisted diagnosis but also provides useful feedback for further model optimization.
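The core Grad-CAM computation is short once the activations and gradients are available. Each channel k of a convolutional layer gets a weight equal to the spatial mean of the gradient of the class score with respect to that channel. The map is the ReLU of the weighted sum of channels. The sketch below assumes that activations and gradients have already been extracted from the chosen layer, for example with framework hooks. The choice of layer and the nearest-neighbour upsampling helper are assumptions, not details from the study.

```python
import numpy as np


def grad_cam(activations, gradients):
    """Grad-CAM map for one class from a conv layer's activations and gradients.

    activations: A^k, shape (K, H, W); gradients: dy^c/dA^k, same shape.
    """
    alphas = gradients.mean(axis=(1, 2))             # alpha_k^c: spatially averaged gradients
    cam = np.tensordot(alphas, activations, axes=1)  # sum_k alpha_k^c * A^k -> (H, W)
    cam = np.maximum(cam, 0.0)                       # ReLU: keep features that raise y^c
    peak = cam.max()
    return cam / peak if peak > 0 else cam           # scale to [0, 1] for display


def upsample_nearest(cam, factor):
    """Nearest-neighbour upsampling of the coarse map to image resolution (integer factor)."""
    return np.repeat(np.repeat(cam, factor, axis=0), factor, axis=1)
```

Because the map is computed at the resolution of the chosen layer, deeper layers give coarser but more class-specific heatmaps. The map must be upsampled before it is overlaid on the original image.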

Enhanced high-risk plaque detection with an attention mechanism

Incorporating an attention mechanism significantly improved the model’s performance in detecting high-risk plaques compared with models without this component (Figure 5). Analysis of the weight distribution showed that the attention mechanism increased the accuracy of identifying vulnerable plaques by 10 percentage points relative to traditional models. By concentrating on important regions, the attention module reduces information redundancy and supports personalized risk assessment.
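The text does not specify the exact form of the attention module. One widely used form in U-Net segmenters is the additive attention gate of Attention U-Net, which produces a per-pixel coefficient map in (0, 1). That map is the kind of weight distribution that can be inspected for plaque regions. The sketch below shows this variant as an assumption. The gating features `g` are assumed to be resampled already to the spatial size of the skip features `x`. The 1 × 1 convolution weights `w_x`, `w_g`, and `psi` are hypothetical and would normally be learned.

```python
import numpy as np


def sigmoid(x):
    return 1.0 / (1.0 + np.exp(-x))


def attention_gate(x, g, w_x, w_g, psi, b_psi=0.0):
    """Additive attention gate (Attention U-Net style).

    x: skip-connection features (C_x, H, W); g: gating features (C_g, H, W) at the same
    spatial size; w_x (F, C_x), w_g (F, C_g) and psi (F,) act as 1x1 convolutions.
    Returns the gated features and the (H, W) attention-coefficient map.
    """
    inter = np.einsum("fc,chw->fhw", w_x, x) + np.einsum("fc,chw->fhw", w_g, g)
    inter = np.maximum(inter, 0.0)                               # ReLU
    alpha = sigmoid(np.einsum("f,fhw->hw", psi, inter) + b_psi)  # coefficients in (0, 1)
    return x * alpha[None, :, :], alpha
```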

Data augmentation and optimization strategies to improve model performance

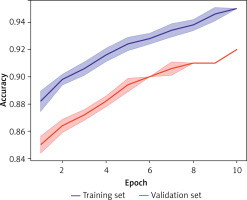

Data augmentation techniques such as rotation and cropping, combined with optimization strategies (learning rate adjustment and early stopping), substantially enhanced the model’s generalizability and robustness. Figure 6 presents the results of 5-fold cross-validation, in which the model achieved an average accuracy above 90% across data splits. Weight decay and dropout layers effectively mitigated overfitting, resulting in more reliable performance on the test set.
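The regularization and learning-rate strategies named above can be illustrated with a NumPy sketch. It is not the training code used in the study. It shows an Adam update in which weight decay is added to the gradient as an L2 penalty, an assumed plateau rule for the "dynamically adjusted" learning rate (reduce by `factor` when recent validation losses stop improving), and inverted dropout. The default learning rate of 0.001 comes from the text. The betas, weight-decay strength, patience, and factor are hypothetical.

```python
import numpy as np


class Adam:
    """Adam with L2-style weight decay (the decay term is added to the gradient)."""

    def __init__(self, lr=1e-3, betas=(0.9, 0.999), eps=1e-8, weight_decay=1e-4):
        self.lr, self.b1, self.b2 = lr, betas[0], betas[1]
        self.eps, self.wd = eps, weight_decay
        self.m = self.v = None
        self.t = 0

    def step(self, w, grad):
        if self.m is None:
            self.m, self.v = np.zeros_like(w), np.zeros_like(w)
        grad = grad + self.wd * w                     # weight decay pulls weights toward 0
        self.t += 1
        self.m = self.b1 * self.m + (1 - self.b1) * grad
        self.v = self.b2 * self.v + (1 - self.b2) * grad ** 2
        m_hat = self.m / (1 - self.b1 ** self.t)      # bias-corrected first moment
        v_hat = self.v / (1 - self.b2 ** self.t)      # bias-corrected second moment
        return w - self.lr * m_hat / (np.sqrt(v_hat) + self.eps)


def reduce_on_plateau(lr, val_history, patience=2, factor=0.5, min_delta=1e-3):
    """Return lr * factor if the last `patience` losses did not beat the earlier best."""
    if len(val_history) <= patience:
        return lr
    best_before = min(val_history[:-patience])
    if min(val_history[-patience:]) > best_before - min_delta:
        return lr * factor
    return lr


def inverted_dropout(x, p, rng, training=True):
    """Zero each unit with probability p and rescale survivors so the expectation is unchanged."""
    if not training or p == 0:
        return x
    keep = rng.random(x.shape) >= p
    return x * keep / (1 - p)
```

Adding decay to the gradient matches the classic L2 formulation. Decoupled weight decay (AdamW) applies the decay directly to the weights instead, and the text does not say which form was used.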

Figure 6

Analysis of model stability and generalizability. The figure shows accuracy variations during 5-fold cross-validation. The blue line represents the training set; the red line represents the validation set. The model demonstrates stable performance with minimal fluctuations across different data splits

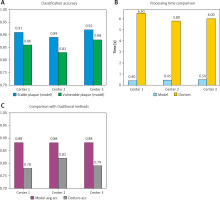

Clinical applicability and multicenter validation

The model showed strong applicability and generalizability on multicenter clinical datasets (Figure 7), accurately identifying and classifying both stable and vulnerable plaques. Compared with traditional physician evaluations, it reduced processing time by approximately 70%, substantially improving clinical diagnostic efficiency. These results support the model’s role as an auxiliary tool in clinical workflows, providing reliable early warning of CVEs.

Figure 7

Analysis of multicenter dataset test results. The figure shows classification accuracies for different lesion types (stable and vulnerable plaques) and compares the model’s processing time and performance with those of traditional physician assessments. The curves and bar charts indicate that the model performs stably across all centers, with significantly higher efficiency and accuracy than traditional methods

This study also systematically compared the proposed model with traditional clinical assessments (such as the Framingham risk score and the ASCVD 10-year risk calculator) in terms of accuracy, sensitivity, AUC, and average processing time. As shown in Table III, the model achieved higher accuracy on the test set than traditional assessment (92% vs. 78%), higher sensitivity (90% vs. 75%), and a higher AUC (0.97 vs. 0.81). Moreover, the average processing time per case decreased from 6.3 ±1.4 min to 1.9 ±0.4 min (a reduction of 70%, p < 0.001). These results support the dual advantages of the DL model in both precision and efficiency.

High-risk event prediction and personalized treatment guidance

Further analysis of the model’s predictions showed that high-risk patient characteristics, such as plaque volume and distribution, were strongly correlated with the predicted risk values. These insights can inform personalized treatment strategies, such as targeted interventional procedures or pharmacological therapy for high-risk areas. The findings suggest the model’s potential for informing precision medicine strategies and offer a new approach to managing cardiovascular risk.

Discussion

Atherosclerosis is a primary pathological basis of cardiovascular disease, characterized by lipid deposition in the vascular wall, chronic inflammation, and vascular remodeling. Accurate risk stratification of atherosclerotic lesions is essential for early intervention and personalized treatment. Traditional risk assessment methods rely heavily on clinical expertise and imaging interpretation, which makes them susceptible to subjectivity and limits their ability to quantify lesion characteristics. Recent reviews have further emphasized that fibrous cap thinning, lipid core expansion, and positive remodeling in high-risk plaques are significantly associated with major adverse cardiovascular events (Gallone et al., 2023; Sarraju and Nissen, 2024); however, these imaging indicators have still not been fully quantified within traditional risk scoring systems [20, 21].

In recent years, DL has shown remarkable progress in medical imaging analysis, particularly in automated lesion segmentation and precise classification [12, 22]. However, fully leveraging multimodal data, including imaging and clinical features, to enhance predictive performance and interpretability remains a major research challenge [23, 24]. This study developed a DL-based risk stratification model for atherosclerosis that performs well in both lesion segmentation and high-risk event prediction. Specifically, the U-Net model achieved a Dice coefficient of 0.88, effectively delineating both the core and peripheral regions of atherosclerotic plaques, while the attention-enhanced ResNet classification model attained an AUC of 0.97 on the test set. These results confirm the effectiveness of the proposed model. By comparison, DenseNet achieves feature reuse with relatively few parameters, and EfficientNet performs excellently on natural image benchmarks such as ImageNet through compound scaling. However, these architectures may be prone to overfitting on small medical imaging datasets and have limited capability to capture high-resolution vascular wall textures. Because U-Net’s skip connections preserve spatial detail and ResNet’s residual structure stabilizes deep network training, both of which have been well validated in various medical imaging tasks, we selected a U-Net + ResNet architecture as the backbone and introduced an attention module to compensate for the lack of long-range dependency information. This choice is quantitatively supported by the ablation experiments conducted in this study.
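The two architectural properties invoked above, residual shortcuts and U-Net skip connections, can be shown in a few lines. The sketch is deliberately simplified and does not reproduce the study’s backbone. It uses 1 × 1 convolutions (channel-mixing matrices) instead of the 3 × 3 convolutions and normalization layers of real ResNet blocks, and nearest-neighbour upsampling instead of learned transposed convolutions. The weights `w1` and `w2` are hypothetical.

```python
import numpy as np


def conv1x1(x, w):
    """1x1 convolution: mixes the channels of a (C_in, H, W) map with a (C_out, C_in) matrix."""
    return np.einsum("oc,chw->ohw", w, x)


def residual_block(x, w1, w2):
    """y = ReLU(F(x) + x): the identity shortcut gives gradients a direct path around F."""
    f = conv1x1(np.maximum(conv1x1(x, w1), 0.0), w2)
    return np.maximum(f + x, 0.0)


def upsample2x(x):
    """Nearest-neighbour 2x upsampling of a (C, H, W) map."""
    return x.repeat(2, axis=1).repeat(2, axis=2)


def unet_skip_merge(decoder_feat, encoder_feat):
    """U-Net skip connection: upsample the coarse decoder map and concatenate the
    full-resolution encoder map along the channel axis, re-injecting spatial detail."""
    return np.concatenate([upsample2x(decoder_feat), encoder_feat], axis=0)
```

If the residual branch learns nothing (`w2` near zero), the block reduces to the identity for non-negative inputs. This property is why stacking residual blocks does not degrade training the way stacking plain layers can.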

Compared with the current standard risk assessment workflow, which relies on Framingham, ASCVD, and other standardized clinical scoring systems supplemented by expert imaging interpretation, the automated DL framework proposed in this study significantly improves both assessment efficiency and accuracy. Traditional scoring systems focus on systemic risk factors such as lipid levels and blood pressure but inadequately quantify plaque burden and vulnerability factors on imaging. In contrast, our model can automatically perform plaque segmentation and high-risk feature identification without adding extra manual workload, providing imaging-level supplementation to standardized algorithms and thus enabling more refined individualized risk stratification [25, 26].

Compared with existing DL studies, this work introduced an attention mechanism to focus on high-risk plaque regions and integrated multimodal data (ultrasound imaging, CTA, and clinical features) to achieve more comprehensive risk stratification. Notably, Lewandowski et al. recently developed a machine learning (ML) model that predicts in-hospital mortality based on multicenter data from more than 3,000 patients with out-of-hospital cardiac arrest [27], supporting the clinical value of ML approaches in acute cardiovascular settings. This finding supports the broader feasibility of ML-based risk prediction in cardiovascular care, which is consistent with the rationale for our model. These innovations not only enhance the predictive performance of the model but also provide good interpretability and potential for clinical application.

The findings indicate that the model is well suited to clinical practice as an auxiliary diagnostic tool that helps physicians rapidly identify high-risk patients and formulate personalized treatment plans. Notably, the attention mechanism significantly increases sensitivity for the early detection of high-risk, vulnerable plaques. In addition, compared with traditional methods, the model reduces analysis time by approximately 70%, substantially saving time in clinical workflows. Validation on multicenter data confirmed the model’s robustness across diverse lesion types and patient populations, further supporting its potential for widespread adoption.

In clinical practice, cardiovascular risk prediction emphasizes multidimensional integration rather than relying solely on imaging parameters. In addition to the traditional indicators already included in this study, such as lipid levels, future work should systematically incorporate other conventional risk factors, including family history, personal medical history, blood pressure, diabetes status, and smoking and alcohol consumption history, and combine them with emerging biomarkers such as inflammatory markers (e.g., hs-CRP), high-sensitivity cardiac troponin I, and NT-proBNP to construct a more comprehensive risk feature space. Photon-counting computed tomography (PCCT) is also developing rapidly: its high spectral resolution and low radiation dose are advantageous for quantifying calcified and soft plaques, and combining it with the AI framework proposed in this study is expected to enable non-invasive, refined, full-chain cardiovascular risk assessment that provides more reliable evidence for precision interventions.

Despite the significant progress achieved in multimodal data analysis, some limitations remain. First, because this study used only publicly available databases, its sample composition may differ systematically from real-world clinical populations (selection bias), which could limit the external validity of the model for broader populations. Second, the model’s reliance on high-quality annotated data may restrict its application in environments with limited or suboptimal annotations. Third, its performance still requires further optimization in certain patient groups, such as those with poor image quality or subtle lesion characteristics. Finally, the accessibility of CTA in particular may be limited in some regions by equipment costs, radiation exposure, and clinical indications, posing a significant barrier to the model’s global deployment.

Future research will explore incorporating lower-cost, radiation-free, and more accessible imaging modalities such as carotid ultrasound into the training process and assess their combined predictive performance with existing clinical scoring systems to expand the model’s applicability and verify its cross-modal transferability. To address these challenges, upcoming studies should introduce real-world clinical data and prospective cohorts, adopt strategies such as transfer learning and semi-supervised learning to mitigate selection bias, and further enhance model robustness through improved data augmentation and feature fusion techniques.

Model interpretability is critical for clinical acceptance of AI tools [28]. This study employed Grad-CAM to visualize the model’s focus on key lesion regions, generating heatmaps that closely correspond with expert annotations. This feature not only reinforces the credibility of the model’s outputs but also provides clinicians with clear insight into the model’s decision-making process. Feedback from medical professionals indicates that the model’s highlighted lesion areas and high-risk predictions are highly consistent with actual clinical findings, thereby increasing its acceptance in a clinical setting.

To further enhance the model’s practical utility and adaptability, future work could incorporate self-supervised or transfer learning approaches to reduce the reliance on large-scale annotated datasets. Additionally, combining the strengths of conventional statistical methods with DL may further improve high-risk event prediction. Exploring advanced feature fusion techniques for multimodal data and optimizing the model for resource-limited settings – thereby reducing hardware and data demands – could accelerate its global adoption.

This study offers an innovative solution for atherosclerosis risk stratification and demonstrates the potential of DL in medical image analysis. With further optimization and broader implementation, the model is expected to become an essential tool for cardiovascular risk assessment and to advance personalized medicine. Future research will focus on extending the application scope and optimizing performance, ultimately providing clinicians with a more reliable and efficient diagnostic aid to improve patient outcomes.